In recent years our world has been radically transformed by technology. Every major part of our lives is different because of it, and science certainly hasn't escaped this trend. Hundreds of online and open-access journals have emerged lately: scientific publications that rely on electronic formats to distribute their content at a reduced cost, sometimes even free of charge for readers. This is a phenomenon that I personally consider quite positive, since it signals a more open, approachable attitude toward scientific literature. It's also an innovative way to ensure that many of us, researchers and professionals, have quick and affordable access to the latest developments in our disciplines. Still, despite the clear advantages of this approach, it has also produced a few problems that the scientific community has only begun to spot, problems that we will have to solve in the not too distant future.

In October of this year, Science published an interesting project that involved sending fake scientific articles to hundreds of online journals to test the rigor and seriousness of their review process. The methodology itself is quite interesting. The author produced a fake scientific paper, with fabricated data and some marvellous, quite shocking conclusions. In a very rough summary, the piece states that Molecule X from lichen species Y inhibits the growth of cancer cell Z. A computer program generated over 300 slightly different versions of the article, varying the presentation, wording and format. The scientific content and the data, however, were exactly the same in every copy. To submit the paper, hundreds of false names and institutions were generated, most based in African countries (that way no one would worry if the Wassee Institute of Medicine, located in Eritrea, had no known website).

The interesting part is that the article has some serious, immediately observable flaws in both design and methodology. Among them are the use of non-comparable treatments for the control group and the fact that some effects were not isolated at all, which was even acknowledged in the text. For instance, in one of the experiments cancer cells were exposed to both the molecule and radiation. Cell growth under this treatment was compared against a control group that received neither radiation nor the molecule. The fake author then claimed that the inhibition of cell growth was explained mostly by the molecule, ignoring the effect that radiation alone has on the growth of cancer cells. In practice, any researcher should be able to tell that, since the effects were not correctly isolated, very little can be concluded from this comparison.

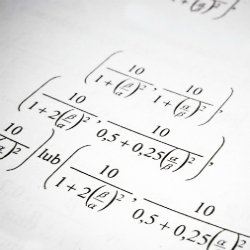

Especially worth noting is that one of the flaws could be spotted easily in the misinterpretation of the very first statistical chart in the text. The fake author claimed that the chart shows a 'dose-dependent' effect on cell growth. The molecule is tested at concentrations spanning five orders of magnitude, to compare its effect on the cancer cells. The paper presents a form of dot plot to support this claim. But the graph shows identical effects at every concentration, so nothing in the image points to a significant difference. Still, the footnote clearly states that the 'author' considers this graph a clear indication of a difference that, well, just does not exist. Details like this, which seriously undermine the results of the research, were part of every submission. So this bogus contribution to science, which was deeply flawed and should have been easily dismissed by any reviewer in the world, ended up in the inbox of many journal editors.

John Bohannon, the author of this interesting exercise, presented his results online. Of the 304 submissions made over a period of about six months, 157 (roughly half) were accepted, while only 98 were rejected. Of the remaining 49 journals, 29 appeared to be no longer operating and 20 were still debating the merits of the text. The article was accepted for publication not only in small, unknown journals, but also in publications hosted by industry giants and even in some associated with prestigious academic institutions. It was accepted by journals published in the US, Europe and Asia. It was even accepted by a journal devoted to Reproductive Research, for which the paper's topic wasn't even appropriate. Interestingly enough, after each acceptance, the fake 'author' responded that he had found some serious mistakes in the design himself and would therefore withdraw the article.
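As a quick sanity check on these figures, here is a small Python tally. It uses only the counts quoted above; the variable names are my own, and the split into "all submissions" versus "final decisions" is simply one reasonable way to read the numbers, not part of Bohannon's published analysis.

```python
# Counts as quoted in the paragraph above.
accepted = 157
rejected = 98
defunct = 29   # journals that appeared to be no longer operating
pending = 20   # journals still considering the paper

total = accepted + rejected + defunct + pending
decided = accepted + rejected  # submissions that reached a final decision

share_of_all = accepted / total
share_of_decided = accepted / decided

print(f"total submissions: {total}")                            # 304
print(f"final decisions:   {decided}")                          # 255
print(f"accepted, of all submissions: {share_of_all:.1%}")      # 51.6%
print(f"accepted, of final decisions: {share_of_decided:.1%}")  # 61.6%
```

Counted against all 304 submissions, the acceptance rate is roughly half. Counted only against the journals that actually reached a decision, it rises to about 62%.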

Why did half of the journals, all of them peer-reviewed, end up accepting such flawed research? Doesn't that affect their credibility, and even the credibility of the research they published before? One of the problems with online journals is the pay-to-publish model. The practice of charging researchers a fee for publishing their results has been linked to some of the ineffective review processes (one journal that approved the fake article was ready to charge US$3,100 in publication fees). But even acknowledging the impact that such a practice has on the approval process, the results suggest that the main problem may not be the desire for greater income, but a real lack of proper reviewing. Of the 255 papers that went through the entire editorial process to acceptance or rejection, about 60% of the final decisions were made with no sign of peer review. For rejections that may sound good, but it also means that many journals approved the paper without any sign of a proper reading.

Although the experiment was devised to target open-access journals exclusively, the author states that the flaws are not inherent to the online process and may very well be present in printed publications, though no evidence is provided on the matter. Still, it is clear that the conclusions call for a more thorough understanding and application of the peer review process, which seems to be at the center of this discussion. Certainly, this is not a new concern; it has been widely discussed before in serious scientific publications. But the results just discussed clearly indicate a problem that has in fact grown and that, given the exponential growth of online journals, may become a particularly pressing topic. Some journal editors, after being informed of the sting, claimed that the review process has to rely heavily on the good will and ethical conduct of the researchers. Although a researcher acting in good faith would not submit an article with such evident mistakes, there is no guarantee that smaller, yet significant, errors will not occur. We all know that statistical mistakes happen, and happen very often, in many research areas. The reason we need peer review is that even the brightest minds and the purest intentions can still lead to mistakes. Humans are imperfect, and we hope that other humans will be able to notice our errors.

Karl Popper, one of the greatest philosophers of science, stated: 'A rationalist is simply someone for whom it is more important to learn than to be proved right; someone who is willing to learn from others – not by simply taking over another's opinions, but by gladly allowing others to criticize his ideas and by gladly criticizing the ideas of others'. This is why reviews are important: we need to criticize each other, not for the sake of confrontation or glory, but because a fine review is a good intellectual conversation in which both parties learn from each other.