Statistical ideas are linked to various Nobel Prizes, but this link is too rarely acknowledged. It’s time to highlight statistics as a leading driving force behind scientific breakthroughs.

The recent Nobel Prize in Physics, awarded in 2024 to Geoffrey Hinton and John Hopfield, put neural networks and AI in the headlines. I was especially pleased with this outcome, as Geoffrey Hinton, an Edinburgh PhD alumnus known as the “godfather of AI”, and John Hopfield, renowned for his work on Hopfield networks, have made some of the most brilliant contributions to AI.

However, I felt disappointed for statistics. This is yet another Nobel Prize influenced by statistical ideas, and yet another missed opportunity for the field to be recognised.

Let’s talk about physics

The press release for the award details some of the links between core concepts from physics (e.g., atomic spin and energy) and the neural models that the laureates proposed, such as the Hopfield network and the Boltzmann machine. Still, even Geoffrey Hinton himself told the New York Times that:

“If there was a Nobel Prize for computer science, our work would clearly be more appropriate for that.”

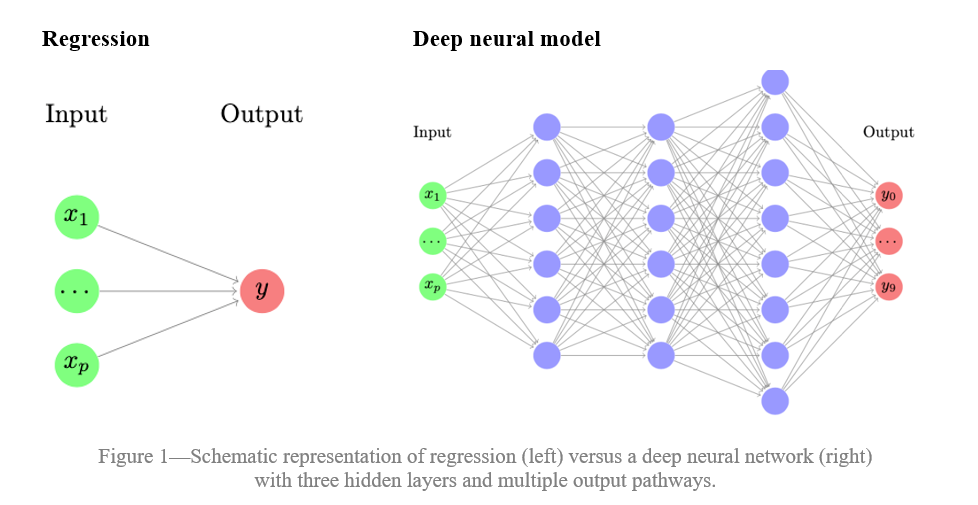

Many of the groundbreaking methods developed by the laureates can be regarded as extensions of standard regression models, a cornerstone of statistics. Standard regression maps inputs (data) to outputs (predictions) through a fitted linear function; neural models extend this by adding hidden layers. In other words, regression is a layer-free approach that produces predictions directly from the inputs in a linear fashion, whereas a neural network passes the inputs through hidden layers, each of which computes weighted sums followed by nonlinear activation functions. This enables neural models to learn more complex patterns and interactions, going beyond the predictive capabilities of traditional regression approaches. See Figure 1 for a schematic representation contrasting both approaches.
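To make the contrast concrete, here is a minimal sketch in pure Python, assuming a single hidden layer with ReLU activations. The function names and the weights are hypothetical, hand-picked for illustration rather than fitted to data, and this is not the laureates' own models. Each hidden unit is itself a regression-style weighted sum; the nonlinearity is what lets the network capture an interaction such as XOR, which no linear predictor `a*x1 + b*x2 + c` can reproduce on all four input points.

```python
from typing import Callable, Sequence


def linear_predictor(x: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Standard (linear) regression prediction: weighted sum of inputs plus an intercept."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def relu(z: float) -> float:
    """A common nonlinear activation function: max(0, z)."""
    return max(0.0, z)


def one_hidden_layer_net(
    x: Sequence[float],
    hidden_weights: Sequence[Sequence[float]],
    hidden_biases: Sequence[float],
    output_weights: Sequence[float],
    output_bias: float,
    activation: Callable[[float], float] = relu,
) -> float:
    """Each hidden unit is a regression-style weighted sum passed through a
    nonlinearity; the output is then a linear predictor on the hidden units."""
    hidden = [
        activation(linear_predictor(x, w, b))
        for w, b in zip(hidden_weights, hidden_biases)
    ]
    return linear_predictor(hidden, output_weights, output_bias)


# Hypothetical hand-picked (not fitted) weights that make the network reproduce XOR.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUT_W = [1.0, -2.0]
OUT_B = 0.0

if __name__ == "__main__":
    for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
        print(x, one_hidden_layer_net(x, HIDDEN_W, HIDDEN_B, OUT_W, OUT_B))
```

Running the script prints the outputs 0.0, 1.0, 1.0 and 0.0 for the four inputs. Passing `activation=lambda z: z` instead collapses the network into an ordinary linear predictor (here `2 - x1 - x2`), which illustrates that it is the nonlinear activation, not the extra layer alone, that takes the model beyond regression.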

Quoting Efron and Hastie¹ on the reaction once neural models became established in the 1980s:

“The knee-jerk response from statisticians was ‘What’s the big deal? A neural network is just a nonlinear model, not too different from many other generalizations of linear models.’”

The big deal is taking shape. It has been over twenty years since Leo Breiman’s influential paper on the two cultures of statistical modeling (the data modeling culture vs. the algorithmic modeling culture) sparked widespread discussion². Neural networks, initially dismissed as simple nonlinear models, have now become a pivotal component of the algorithmic culture, bridging the gap between traditional regression methods and algorithmic modeling. Still, their main successes have been in prediction tasks; their merit for addressing causal or association-focused research questions remains a topic of debate and ongoing research.

Conceptually, neural methods are not all that complicated from a statistical standpoint; their groundbreaking nature comes from their application. (Kai-Fu Lee³ describes the recent shift from the age of discovery to the age of implementation, and from the age of expertise to the age of data.)

A review co-authored by Hinton in Nature offers an overview of deep neural models⁴.

Let’s talk about economics

The first Nobel Prize in Economics was awarded to Ragnar Frisch and Jan Tinbergen in 1969. Although it is not one of the original five Nobel Prizes established by Alfred Nobel in 1895 (its official name is the Sveriges Riksbank Prize in Economic Sciences in Memory of Alfred Nobel), it is commonly referred to as the Nobel Prize in Economics and is administered by the Nobel Foundation alongside the other Nobel Prizes. In the opening sentence of its speech, the Royal Swedish Academy of Sciences stated the following:

“In the past forty years, economic science has developed increasingly in the direction of a mathematical specification and statistical quantification of economic contexts.”

Has the paradigm fundamentally changed since then?

Ben Bernanke, now a Nobel Laureate in Economics, was once Chairman of the Federal Reserve and has written several academic papers in economics that employ statistical analyses. The Royal Swedish Academy of Sciences noted the following about his work:

“Through statistical analysis and historical source research, Bernanke demonstrated how failing banks played a decisive role in the global depression of the 1930s.”

Let’s look at another recent example. In 2021, Joshua Angrist and Guido Imbens were jointly awarded half of the Nobel Prize in Economics for their “methodological contributions to the analysis of causal relationships” (the other half went to David Card). Causal inference is a highly active research field in statistics that studies causes and effects, recognising that correlation does not imply causation. It is particularly valuable in the social sciences, because in economics it is often impossible to conduct randomised experiments, in which individuals are divided randomly into groups and only one group receives a treatment (e.g., a vaccine).

In economics, practitioners do not have access to two parallel realities: for example, one where a government lowers taxes and another where it does not. Nor would it be socially desirable for a government to reduce taxes only for a randomly chosen group of individuals, while not doing so for another group in similar economic conditions. Causal inference thus addresses statistical inference under these constraints, and continues to develop a range of statistical tools to tackle them. In a YouTube video, Guido Imbens discusses causal inference with his kids. For a deeper dive into causal inference, refer to the Imbens and Rubin monograph⁵.
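Why randomisation matters can be illustrated with a small simulation. The sketch below is a hypothetical setup with made-up numbers, not the laureates’ own methods: an unobserved confounder `u` raises the outcome, and the true treatment effect is fixed at 2.0. When individuals self-select into treatment based on `u`, the naive difference in mean outcomes mixes the treatment effect with the confounder; when treatment is assigned by a coin flip, the same difference in means recovers the effect.

```python
import random
from statistics import mean

TRUE_EFFECT = 2.0  # hypothetical causal effect of the treatment on the outcome


def simulate(n: int, randomise: bool, seed: int = 2021) -> float:
    """Return the difference in mean outcomes (treated minus untreated).

    A hypothetical unobserved confounder `u` raises the outcome. When
    `randomise` is False, individuals with larger `u` are also more likely
    to opt into treatment (self-selection)."""
    rng = random.Random(seed)
    treated, untreated = [], []
    for _ in range(n):
        u = rng.gauss(0.0, 1.0)  # unobserved confounder
        if randomise:
            t = rng.random() < 0.5  # coin flip, independent of u
        else:
            t = u + rng.gauss(0.0, 1.0) > 0.0  # uptake depends on u
        y = TRUE_EFFECT * t + 3.0 * u + rng.gauss(0.0, 1.0)
        (treated if t else untreated).append(y)
    return mean(treated) - mean(untreated)


if __name__ == "__main__":
    print("self-selected:", round(simulate(50_000, randomise=False), 2))
    print("randomised:   ", round(simulate(50_000, randomise=True), 2))
```

Under these assumptions the randomised estimate should land close to 2.0, while the self-selected comparison is biased upwards (about 5.4 in expectation), because the treated group has systematically larger values of `u`.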

Taking stock

Needless to say, the above are just a few recent examples: statistical ideas have played a crucial role in many other Nobel Prizes and in other prominent scientific awards (e.g., the 2022 Fields Medal awarded to Hugo Duminil-Copin, who was recognised for his work in statistical physics).

Coming back to the article’s headline: partners in excellence? Absolutely. Yet we can do more to promote the field. Every statistician has a part to play, but it is up to the statistical associations to lead and take bold action.

Statistical associations must urgently raise public awareness of the vital role of statistics in Nobel Prize-winning research and other major contributions. While some initiatives exist, dedicated ‘stats in science’ taskforces should be established to highlight statistics as a leading driving force behind scientific breakthroughs. These should bring together academics, science communicators, and the media to deliver this message. Taskforce members should issue press releases, write op-eds, and give interviews, showcasing how statistics powers these achievements. Podcasts could serve as an additional channel to engage the public and showcase the indispensable role of statistics in advancing science.

Will statistical associations remain in the background, or will they step up to claim our field’s rightful place at the forefront of science?

References

- Efron, B. and Hastie, T. Computer Age Statistical Inference. CUP: Cambridge, 2021. doi.org/10.1017/CBO9781316576533

- Breiman, L. Statistical modeling: The two cultures. Statist. Sci. 16(3): 199–231 (August 2001). doi.org/10.1214/ss/1009213726

- Lee, K.-F. AI Superpowers: China, Silicon Valley, and the New World Order. Houghton Mifflin Harcourt: Boston, 2018.

- LeCun, Y., Bengio, Y. and Hinton, G. Deep learning. Nature 521 (2015), 436–444. www.nature.com/articles/nature14539

- Imbens, G.W. and Rubin, D.B. Causal Inference for Statistics, Social, and Biomedical Sciences: An Introduction. CUP: Cambridge, 2015. doi.org/10.1017/CBO9781139025751

Miguel de Carvalho is the chair of statistical data science at the University of Edinburgh and holds an honorary chair of statistics at University of Aveiro.


Photo: Stockholm, Sweden – July 29, 2024: Portrait of Alfred Nobel on the doors to the Nobel Prize Museum. Bumble Dee/Shutterstock